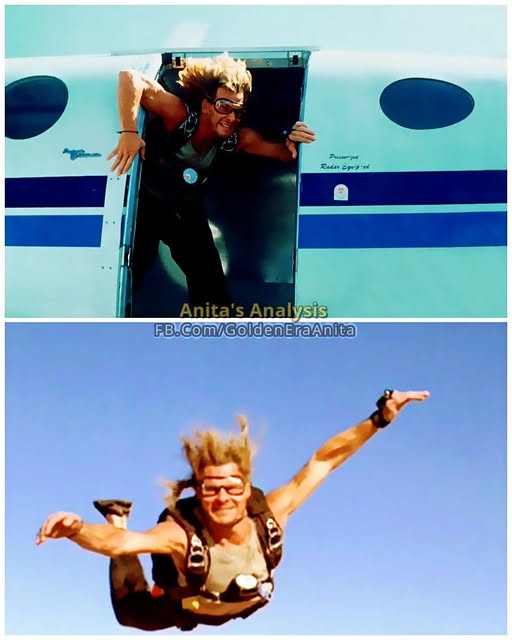

Patrick Swayze jumped out of a plane without a stunt double over 50 times during the filming of “Point Break” (1991). He insisted on it. Not for spectacle, but for truth.

Director Kathryn Bigelow didn’t originally have Swayze in mind for the role of Bodhi. The studio had expected a grittier action type, someone who matched the sharp edges of Keanu Reeves’ undercover FBI agent Johnny Utah. Swayze, known more for romantic charisma in “Dirty Dancing” (1987) and emotional depth in “Ghost” (1990), was considered too polished. But he saw something in the script no one else did. Bodhi wasn’t just a surfer or a criminal. He was a seeker. A man chasing freedom even if it meant self-destruction.

Swayze flew himself to Bigelow’s office in a helicopter to pitch his vision of the character. He wasn’t selling himself as an action hero. He was offering a philosophy: Bodhi wasn’t acting out rebellion. He believed in it. Swayze’s conviction caught Bigelow’s attention, and the studio agreed to cast him.

Bodhi’s spiritual radicalism wasn’t accidental. Swayze built it from fragments of his own worldview. Raised in Texas under the discipline of his mother’s ballet studio, he knew what it meant to crave motion and freedom. Surfing, skydiving, martial arts: he trained for all of it. And when production started, he didn’t fake anything.

He surfed until the saltwater blurred his vision.

While most actors let doubles handle high-risk shots, Swayze refused. During a key mid-air sequence, Bodhi leaps from a plane. Swayze performed that jump himself, again and again. The production eventually had to ask him to stop, worried he would get injured before the film wrapped.

It wasn’t recklessness. It was trust: in the role, in the team, in the film’s pulse. He later said that the adrenaline was only part of it. The real thrill was telling a story that meant something. Bodhi’s code wasn’t empty dialogue. Swayze wanted the audience to feel what Bodhi felt when he paddled out to sea, knowing he wouldn’t return.

He trained in secret to make Bodhi’s fights unpredictable.

The beach fight sequence wasn’t choreographed for standard movie violence. Swayze pushed for fluidity, drawing from his dance background to add rhythm and improvisation. He even trained separately in Brazilian jiu-jitsu and aikido to make Bodhi’s moves look like natural extensions of his beliefs. Each motion was grounded in control rather than aggression.

One of the crew members later revealed that Swayze spent nights editing his own performance tapes, fine-tuning how Bodhi breathed, blinked, and stared at the horizon. That attention to stillness made Bodhi unsettling. He wasn’t out of control. He was calm. Even in the final moments on the beach in Australia, when Utah lets him paddle into the deadly storm, Bodhi’s stillness felt earned.

He rewrote several of Bodhi’s monologues by hand.

The original script had Bodhi delivering heavier exposition, but Swayze pared it down. He believed Bodhi would speak less and feel more. He trimmed the lines, simplified the philosophy, and brought a quiet intensity that made the character magnetic. Bodhi’s lines stuck not because they were loud, but because they were spare and honest.

That creative gamble turned “Point Break” into a different kind of action film. It didn’t chase explosions. It chased meaning. And audiences noticed. The film wasn’t a massive box office hit at first, but it refused to fade. By the early 2000s, it had grown into a cultural landmark. Directors cited it. Actors studied it. Surfers quoted it.

And Patrick Swayze’s Bodhi stood at the center, not because he shouted, but because he believed.

He gave Bodhi soul. He gave action cinema a heartbeat.